Deploying a Slurm cluster on HPC

Prerequisites

In this guide, we will need:

- 1 controller node (Ubuntu 18.04.6 LTS)
- 1 worker node (a Jetson Nano in my case, also running Ubuntu 18.04.6 LTS)

Set up the controller node

- Open a terminal on the controller node.

- Run these commands to install the dependencies:

```shell
sudo apt update
sudo apt upgrade -y
sudo apt-get install -y openssh-server munge libmunge2 libmunge-dev slurm-wlm sudo && sudo apt-get clean
```

- Check that munge runs properly:

```shell
munge -n | unmunge | grep STATUS
```

It should print a line like `STATUS: Success (0)`.

- Check that the key exists at `/etc/munge/munge.key`. If it does not, generate a new key:

```shell
sudo /usr/sbin/mungekey
```

- Set the correct permissions:

```shell
sudo chown -R munge: /etc/munge/ /var/log/munge/ /var/lib/munge/ /run/munge/
sudo chmod 0700 /etc/munge/ /var/log/munge/ /var/lib/munge/
sudo chmod 0755 /run/munge/
sudo chmod 0700 /etc/munge/munge.key
sudo chown -R munge: /etc/munge/munge.key
```

- Restart the munge service and configure it to run at startup:

```shell
sudo systemctl enable munge
sudo systemctl restart munge
```

- Check the status of the munge service:

```shell
sudo systemctl status munge
```

- Copy the key to the worker:

sudo scp /etc/munge/munge.key ubuntu@<IP of the worker>:/home/ubuntu/munge.key - Use slurm’s handy configuration file generator located at

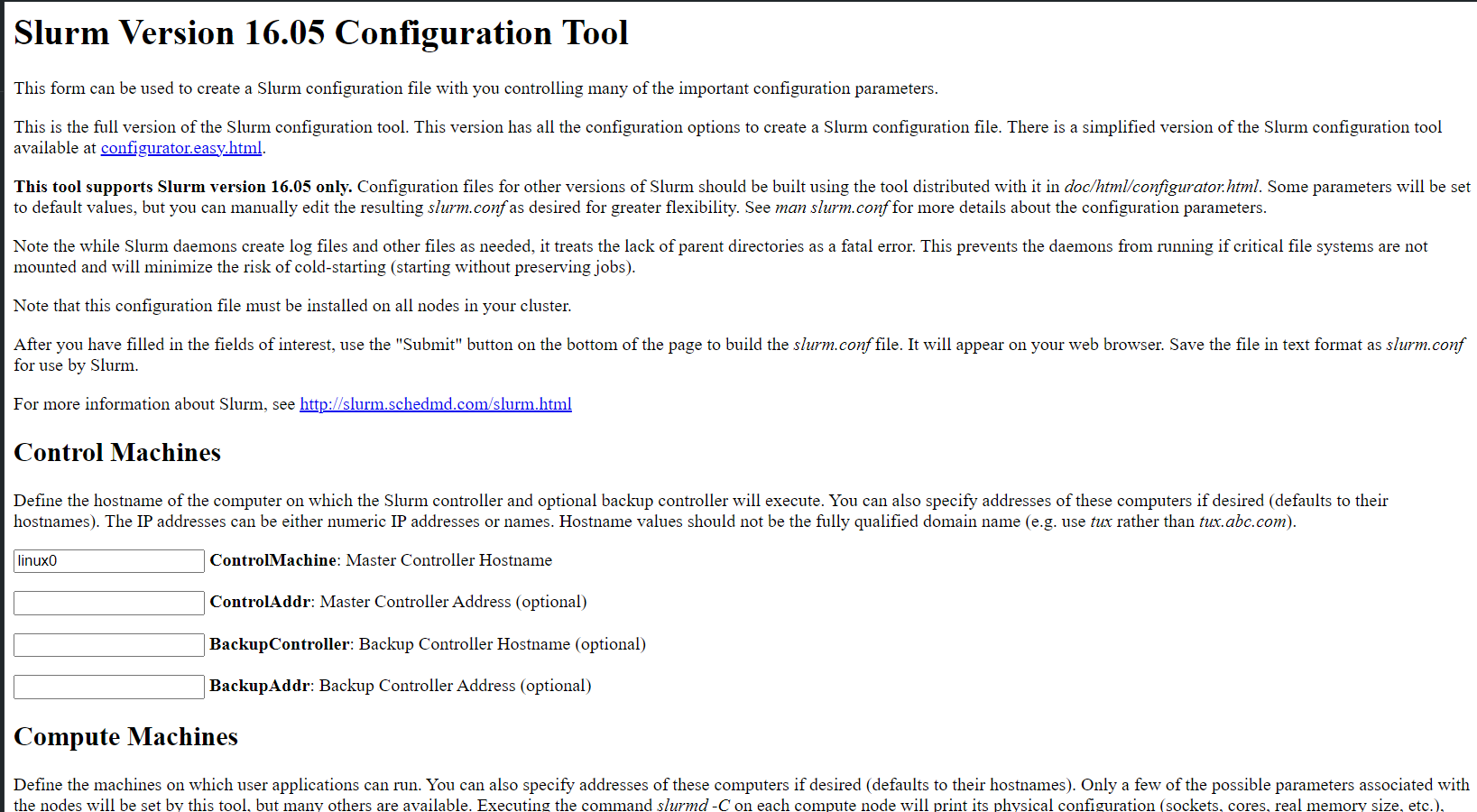

/usr/share/doc/slurmctld/slurm-wlm-configurator.htmlto create your configuration file. You can open the configurator file with your browser. Or using my configuration sample (the below configuration may not fit with your system):

Or using my configuration sample (the below configuration may not fit with your system):

```
# slurm.conf file generated by configurator.html.
# Put this file on all nodes of your cluster.
# See the slurm.conf man page for more information.
#
ControlMachine=controller
#ControlAddr=
#BackupController=
#BackupAddr=
#
AuthType=auth/munge
#CheckpointType=checkpoint/none
CryptoType=crypto/munge
#DisableRootJobs=NO
#EnforcePartLimits=NO
#Epilog=
#EpilogSlurmctld=
#FirstJobId=1
#MaxJobId=999999
#GresTypes=
#GroupUpdateForce=0
#GroupUpdateTime=600
#JobCheckpointDir=/var/lib/slurm-llnl/checkpoint
#JobCredentialPrivateKey=
#JobCredentialPublicCertificate=
#JobFileAppend=0
#JobRequeue=1
#JobSubmitPlugins=1
#KillOnBadExit=0
#LaunchType=launch/slurm
#Licenses=foo*4,bar
#MailProg=/usr/bin/mail
#MaxJobCount=5000
#MaxStepCount=40000
#MaxTasksPerNode=128
MpiDefault=none
#MpiParams=ports=#-#
#PluginDir=
#PlugStackConfig=
#PrivateData=jobs
ProctrackType=proctrack/pgid
#Prolog=
#PrologFlags=
#PrologSlurmctld=
#PropagatePrioProcess=0
#PropagateResourceLimits=
#PropagateResourceLimitsExcept=
#RebootProgram=
ReturnToService=1
#SallocDefaultCommand=
SlurmctldPidFile=/var/run/slurm-llnl/slurmctld.pid
SlurmctldPort=6817
SlurmdPidFile=/var/run/slurm-llnl/slurmd.pid
SlurmdPort=6818
SlurmdSpoolDir=/var/lib/slurm-llnl/slurmd
SlurmUser=slurm
#SlurmdUser=root
#SrunEpilog=
#SrunProlog=
StateSaveLocation=/var/lib/slurm-llnl/slurmctld
SwitchType=switch/none
#TaskEpilog=
TaskPlugin=task/none
#TaskPluginParam=
#TaskProlog=
#TopologyPlugin=topology/tree
#TmpFS=/tmp
#TrackWCKey=no
#TreeWidth=
#UnkillableStepProgram=
#UsePAM=0
#
#
# TIMERS
#BatchStartTimeout=10
#CompleteWait=0
#EpilogMsgTime=2000
#GetEnvTimeout=2
#HealthCheckInterval=0
#HealthCheckProgram=
InactiveLimit=0
KillWait=30
#MessageTimeout=10
#ResvOverRun=0
MinJobAge=300
#OverTimeLimit=0
SlurmctldTimeout=120
SlurmdTimeout=300
#UnkillableStepTimeout=60
#VSizeFactor=0
Waittime=0
#
#
# SCHEDULING
#DefMemPerCPU=0
FastSchedule=1
#MaxMemPerCPU=0
#SchedulerRootFilter=1
#SchedulerTimeSlice=30
SchedulerType=sched/backfill
SchedulerPort=7321
SelectType=select/linear
#SelectTypeParameters=
#
#
# JOB PRIORITY
#PriorityFlags=
#PriorityType=priority/basic
#PriorityDecayHalfLife=
#PriorityCalcPeriod=
#PriorityFavorSmall=
#PriorityMaxAge=
#PriorityUsageResetPeriod=
#PriorityWeightAge=
#PriorityWeightFairshare=
#PriorityWeightJobSize=
#PriorityWeightPartition=
#PriorityWeightQOS=
#
#
# LOGGING AND ACCOUNTING
#AccountingStorageEnforce=0
#AccountingStorageHost=
#AccountingStorageLoc=
#AccountingStoragePass=
#AccountingStoragePort=
AccountingStorageType=accounting_storage/none
#AccountingStorageUser=
AccountingStoreJobComment=YES
ClusterName=cluster
#DebugFlags=
#JobCompHost=
#JobCompLoc=
#JobCompPass=
#JobCompPort=
JobCompType=jobcomp/none
#JobCompUser=
#JobContainerType=job_container/none
JobAcctGatherFrequency=30
JobAcctGatherType=jobacct_gather/none
SlurmctldDebug=3
SlurmctldLogFile=/var/log/slurm-llnl/slurmctld.log
SlurmdDebug=3
SlurmdLogFile=/var/log/slurm-llnl/slurmd.log
#SlurmSchedLogFile=
#SlurmSchedLogLevel=
#
#
# POWER SAVE SUPPORT FOR IDLE NODES (optional)
#SuspendProgram=
#ResumeProgram=
#SuspendTimeout=
#ResumeTimeout=
#ResumeRate=
#SuspendExcNodes=
#SuspendExcParts=
#SuspendRate=
#SuspendTime=
#
#
# COMPUTE NODES
NodeName=node[1-2] CPUs=4 Boards=1 SocketsPerBoard=1 CoresPerSocket=4 ThreadsPerCore=1 State=UNKNOWN
PartitionName=debug Nodes=node[1-2] Default=YES MaxTime=INFINITE State=UP
```

Adjust `ControlMachine` to your controller's hostname (`controller` here). Adjust `NodeName` and `PartitionName` to match your workers' hostnames and hardware. This sample declares two 4-CPU nodes (`node1` and `node2`), while this guide sets up only one worker (`node1`). Running `slurmd -C` on a worker prints a `NodeName` line that describes its hardware.

- Copy the configuration and paste it into `/etc/slurm-llnl/slurm.conf`:

```shell
sudo nano /etc/slurm-llnl/slurm.conf
```

- Start the Slurm controller service and configure it to start at boot:

sudo systemctl enable slurmctld sudo systemctl restart slurmctld - You can now check your slurm installation is runnning and your cluster is Setup with the following commands:

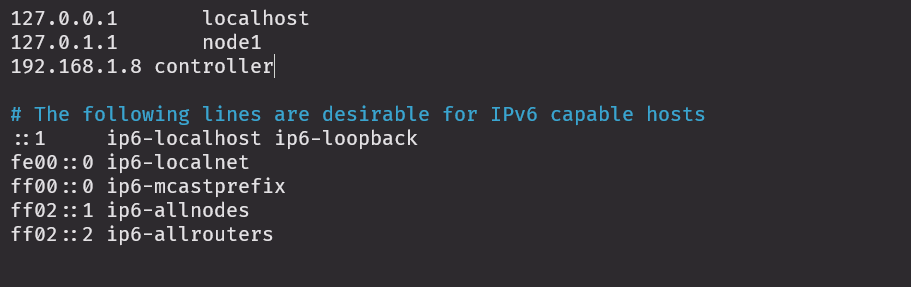

systemctl status slurmctld # returns status of slurm service sinfo # returns cluster information - Modify

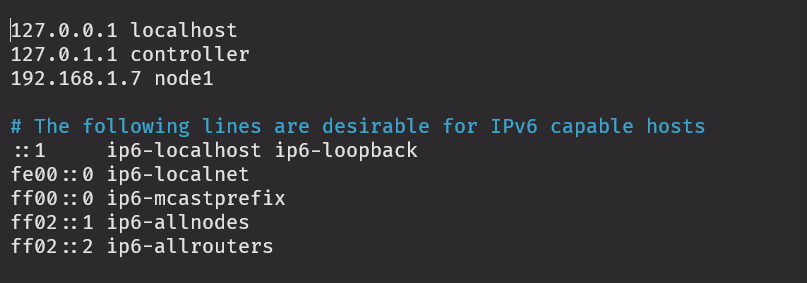

/etc/hostswith hostname of the worker node and its IP address, in my case it would be:
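You can spot-check the munge and `/etc/hosts` steps above with a few small helpers. This is a minimal sketch in POSIX `sh` that assumes GNU `stat` and `getent` are available. The function names `munge_status_ok`, `key_mode_ok` and `resolves` are hypothetical and are not part of munge or Slurm.

```shell
#!/bin/sh
# Hypothetical spot-check helpers (not part of munge or Slurm).

# munge_status_ok: read `unmunge` output on stdin and succeed only if the
# STATUS line reports "Success (0)". unmunge pads the value with spaces,
# so any run of whitespace after the colon is accepted.
munge_status_ok() {
  grep -Eq '^STATUS:[[:space:]]+Success \(0\)'
}

# key_mode_ok FILE: succeed only if the group and "other" digits of FILE's
# octal mode (as printed by GNU `stat -c %a`) are both 0, e.g. 0400, 0600
# or the 0700 set by the chmod step above. Fails if FILE cannot be stat'ed.
key_mode_ok() {
  _mode=$(stat -c '%a' "$1") || return 1
  # Left-pad with zeros so short modes such as "70" (i.e. 0070) are handled.
  case "000$_mode" in
    *00) return 0 ;;
    *)   return 1 ;;
  esac
}

# resolves HOST: succeed if HOST resolves through the system resolver
# (which consults /etc/hosts), e.g. the worker's hostname on the controller.
resolves() {
  getent hosts "$1" > /dev/null
}
```

To use the helpers, save the block under a name of your choice (for example the hypothetical `checks.sh`) and source it with `. ./checks.sh`. Then run `munge -n | unmunge | munge_status_ok && echo "munge OK"` and `resolves node1 && echo "node1 resolves"`. `/etc/munge` is accessible only to the munge user, so run `key_mode_ok /etc/munge/munge.key` from a root shell in which the helpers have been sourced.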

Set up the worker node

- Repeat steps 1-7 of the controller setup (from opening a terminal through checking the status of the munge service).

- Replace the key with the one copied from the controller node:

```shell
sudo cp /home/ubuntu/munge.key /etc/munge/munge.key
```

- Set the correct permissions:

sudo chown -R munge: /etc/munge/ /var/log/munge/ /var/lib/munge/ /run/munge/ sudo chmod 0700 /etc/munge/ /var/log/munge/ /var/lib/munge/ sudo chmod 0755 /run/munge/ sudo chmod 0700 /etc/munge/munge.key sudo chown -R munge: /etc/munge/munge.key - Restart the munge service and configure it to run at startup:

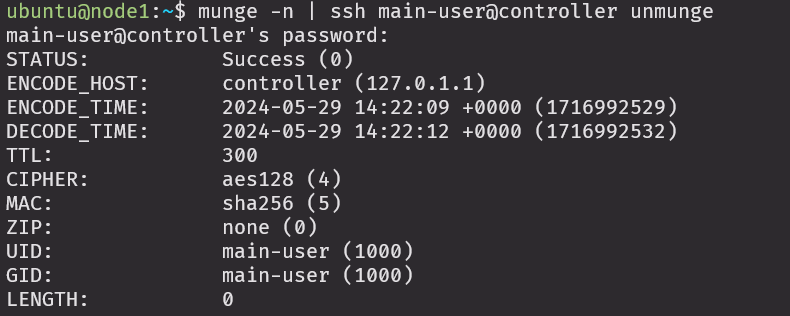

sudo systemctl enable munge sudo systemctl restart munge - Test the munge connection to the controller node:

munge -n | ssh <CONTROLLER_NODE> unmungeIf this is successful, you should see the munge status of the controller node like this:

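- Modify `/etc/hosts` on the worker with the same entries as on the controller (the worker node's hostname and IP address). The controller's hostname must also resolve on the worker, because slurmd contacts the host named in `ControlMachine`.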

- Copy the configuration you generated for the controller and paste it into `/etc/slurm-llnl/slurm.conf`.

Note: the configuration should be identical on all nodes.

- Start the Slurm worker service and configure it to start at boot:

```shell
sudo systemctl enable slurmd
sudo systemctl restart slurmd
# Set node1's state to idle so it can accept jobs
sudo scontrol update nodename=node1 state=idle
```

- Then verify that slurmd is set up correctly and running:

```shell
sudo systemctl status slurmd
sudo tail -f /var/log/slurm-llnl/slurmd.log
```
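Once slurmd is running, you can confirm from the controller that the worker is ready to accept jobs. The sketch below defines hypothetical helpers (not part of Slurm) that filter the output of `sinfo -h -N -o '%N %T'`. In that command, `-h` omits the header, `-N` prints one line per node, and `%N` and `%T` are the node name and its extended state. The helpers list every node whose state is not plain `idle`. A trailing `*`, as in `idle*` or `down*`, means the node is not responding, so such nodes are listed too.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Slurm) for checking node states.
# Input: lines of "<node> <state>" on stdin, as printed by
#   sinfo -h -N -o '%N %T'

# nodes_not_idle: print each node whose state is not exactly "idle",
# once per node (sinfo -N can repeat a node that is in several partitions).
nodes_not_idle() {
  awk '$2 != "idle" && !seen[$1]++ { print $1 }'
}

# all_idle: succeed only if every node in the input is idle.
all_idle() {
  [ -z "$(nodes_not_idle)" ]
}
```

For example, on the controller run `sinfo -h -N -o '%N %T' | nodes_not_idle`. With the sample configuration, `node2` is expected to appear as well. It is declared in `slurm.conf`, but no slurmd runs for it.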

Testing

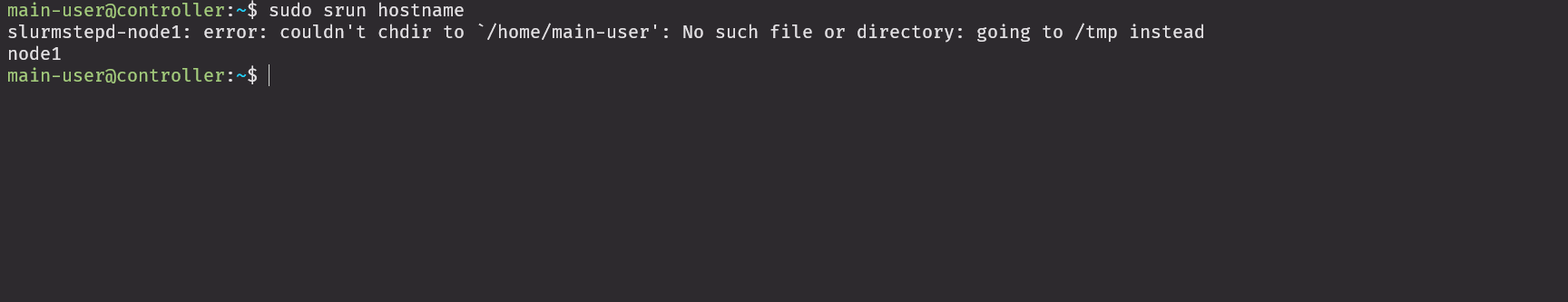

- Go to terminal in controller node, run:

srun hostnameIt should output like this:
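Besides `srun`, you can run the same test as a batch job. Below is a minimal sketch of a batch script; the file name `hello.sbatch` is hypothetical. It assumes the `debug` partition from the sample configuration and uses only standard `sbatch` options. Submit it from the controller with `sbatch hello.sbatch`. This setup has no shared filesystem, so the output file is written to `/tmp` on the worker that runs the job.

```shell
#!/bin/sh
# hello.sbatch (hypothetical name): batch-job version of the `srun hostname` test.
# sbatch reads the #SBATCH lines below; plain sh treats them as comments.
#SBATCH --job-name=hello
#SBATCH --partition=debug
#SBATCH --nodes=1
#SBATCH --ntasks=1
# %j is replaced by the job ID; /tmp exists on every node.
#SBATCH --output=/tmp/hello-%j.out

# Print the job ID (set by Slurm inside a job, "none" otherwise) and the
# name of the node the job ran on (same value as `hostname`).
job_report() {
  echo "job ${SLURM_JOB_ID:-none} ran on $(uname -n)"
}

job_report
```

After the job finishes, `cat /tmp/hello-<jobid>.out` on the worker should show a line naming that worker.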